Mem0 and Mem0g are two new memory architectures that promise to change how AI agents remember and use past information in long conversations. Released in 2025 by the team at Mem0, these systems focus on helping large language models (LLMs) move beyond fixed context limits and become more consistent partners. Their arrival is set to have a broad impact on customer support, enterprise AI, planning, and even healthcare use cases where reliable recall of information is essential over days, weeks, or longer periods.

The launch of Mem0 and Mem0g comes at a time when enterprises are demanding smarter, more capable AI assistants that don’t forget important context from previous interactions. By focusing on dynamic extraction, consolidation, and retrieval of only key information, Mem0’s solution is designed to match how people remember – selectively and efficiently. This shift has new implications for how AI is used in business, making automation more trustworthy and less frustrating for users.

Why Memory Limitations Hurt AI Conversations

Most traditional AI agents lose context quickly because they rely on a fixed context window. Even popular LLMs with context windows of millions of tokens cannot keep track of what happened when a conversation runs for weeks or jumps between topics. This causes the AI to generate answers that lack coherence, ignore the past, or repeat the same mistakes. Users get frustrated when asked to repeat themselves, such as resending order numbers or explaining allergies again to a support bot or healthcare assistant.

Customers in enterprise environments especially notice when AI systems “forget” facts midway through a workflow or across multiple sessions. This isn’t just inefficient; it can also increase costs and even lead to unsafe or broken experiences, like giving incorrect recommendations due to missing history. Traditional methods either reprocess the entire chat history each time, which is slow and expensive, or use simple memory tricks that miss buried facts.

Researchers see these breakdowns as stemming from the fundamental architecture used in LLMs. They often use context windows or retrieval mechanisms that aren’t designed for truly long, dynamic, and unpredictable chats. That’s where dynamic, human-like memory is needed, and why products like Mem0 and Mem0g have emerged to fill the gap.

Context isn’t just about handling more data; it’s about handling the right data at the right time. This is the problem Mem0 aims to solve.

How Large Language Models Treat Context and Memory

Large language models, such as those from OpenAI and Google, have a context window in which they “see” a fixed number of tokens from previous messages. When a conversation exceeds that window, the oldest messages are dropped, no matter how important they were. Even with larger context windows, recall becomes unreliable: as the conversation grows, the model’s attention is spread across more text and it tends to lose track of what matters.
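
To make the window behaviour concrete, here is a minimal sketch of how a fixed token budget drops older messages. The function name `fit_to_window` and the whitespace-based token count are assumptions for illustration only; real models use their own tokenizers and truncation rules.

```python
from collections import deque


def fit_to_window(messages, max_tokens, count_tokens=lambda text: len(text.split())):
    """Keep the newest messages whose combined token count fits the budget."""
    kept = deque()
    used = 0
    # Walk backwards from the newest message and stop once the budget is spent.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.appendleft(message)
        used += cost
    return list(kept)


history = [
    "I am allergic to penicillin",
    "What are your opening hours",
    "Can you recommend something for a sore throat",
]
visible = fit_to_window(history, max_tokens=14)
# The allergy note is the oldest message, so it is the one that falls out.
```

In this toy run the only safety-critical message is also the first to be discarded, which is exactly the failure mode described above.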

Only the most recent or most relevant pieces of conversation are usually visible to the AI. There is no native sense of persistent, growing memory. This is similar to having a conversation with someone who can only remember the last few things said, but forgets what happened last meeting, last week, or even ten minutes ago if the talk runs too long.

This design often means LLM-based agents can’t follow up on tasks that take place over separate sessions or require remembering critical details from earlier. When deployed in real-world environments, the result is an AI that feels less like a human partner and more like an easily distracted computer with a short-term memory limit.

Efforts to extend memory by feeding the model ever-larger histories aren’t perfect. They add processing cost and rarely guarantee that the important information remains accessible and prioritized. This is why new memory architectures are being developed.

The Need for Human-Like Memory in AI Agents

Humans remember selectively – they’re good at holding onto the main points and important facts while letting irrelevant details fade. For AI to function in complex business settings, the technology must do the same. AI agents with human-like memory can track user preferences, project milestones, or service issues over time and build better long-term relationships with users.

The old way – using static or simplistic memory retrieval – breaks down because work and conversations are rarely linear or single-topic. People shift priorities, introduce new problems, and expect the system to adapt. Companies that try to use AI for support or planning quickly hit the wall if the AI can’t keep up context across sessions or workflows.

Human-like memory helps applications in:

- Customer support, where agents must track long issue threads

- Personal assistants that need to recall travel or health details

- Enterprise tools that link related events, approvals, and updates

This is a crucial step for moving past “one-shot” or purely transactional AI and toward agents that act like helpful teammates. Enterprises want AI that understands, remembers, and evolves over many interactions.

What Makes Mem0 Architecture Unique

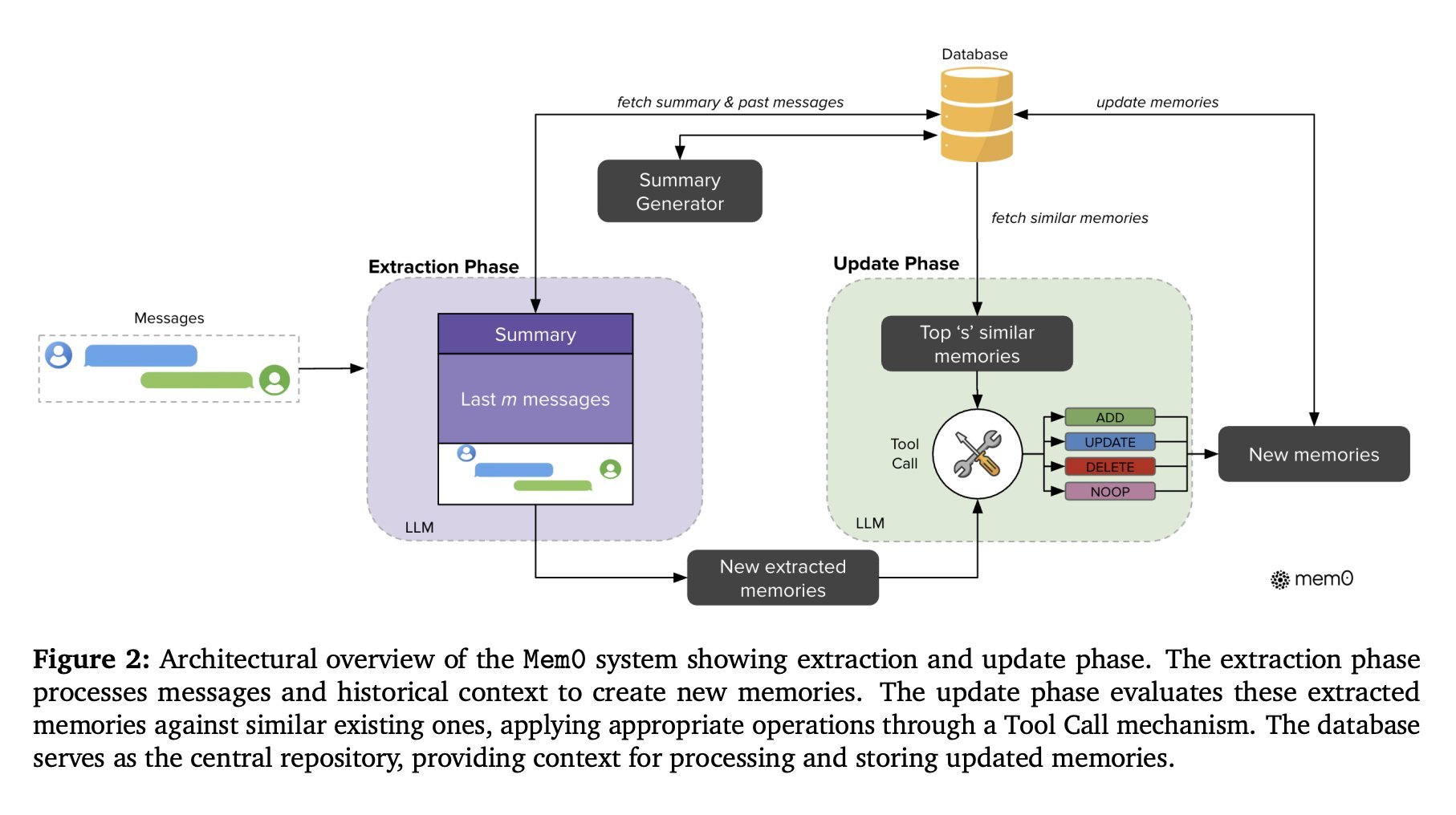

Mem0 takes a new approach by building a pipeline that both extracts and updates memories on-the-fly, capturing only the most important facts from each interaction. It uses a two-phase process – extraction and update – to process each new message exchange, ensuring only relevant data makes it into memory.

The extraction phase gathers information from the recent interaction and a running summary of the whole conversation. This summary is refreshed in the background, so the system always works with current context. Instead of storing every message or keyword, Mem0 transforms each message pair into concise memory snippets that capture just the critical details.
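
A rough sketch of what such an extraction step could look like follows. It is not Mem0’s actual code: `Exchange`, `build_extraction_prompt`, `extract_candidate_facts`, the prompt wording, and the `fake_llm` stand-in are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Exchange:
    user: str
    assistant: str


def build_extraction_prompt(summary: str, exchange: Exchange) -> str:
    """Combine the running summary with the newest message pair."""
    return (
        "Conversation summary:\n"
        f"{summary}\n\n"
        "Latest exchange:\n"
        f"User: {exchange.user}\n"
        f"Assistant: {exchange.assistant}\n\n"
        "List each fact worth remembering on its own line."
    )


def extract_candidate_facts(summary: str, exchange: Exchange,
                            llm: Callable[[str], str]) -> List[str]:
    """Ask the model for facts and return them as cleaned snippets."""
    reply = llm(build_extraction_prompt(summary, exchange))
    return [line.strip("- ").strip() for line in reply.splitlines() if line.strip()]


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call so the sketch runs offline.
    return "- User is vegetarian\n- User is travelling to Lisbon in May\n"


facts = extract_candidate_facts(
    summary="User is planning a spring holiday.",
    exchange=Exchange(user="I'm vegetarian, and I fly to Lisbon in May.", assistant="Noted!"),
    llm=fake_llm,
)
```

The point of the sketch is the shape of the input: the model sees a short summary plus one exchange, not the full transcript.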

The update phase evaluates these new “candidate facts” using the LLM’s reasoning. The model checks whether each fact is new, complements something already stored, contradicts an existing memory that should then be removed, or is redundant and should be skipped. This selective process helps the AI mimic the way people hold on to what matters and forget or ignore what’s irrelevant.

The architecture avoids the cost and confusion of reprocessing the whole conversational history each time. This design is unique compared to classical memory systems, which store too much, miss hidden facts, or require expensive searches through massive logs.

How Mem0 Dynamically Extracts and Updates Key Facts

Every time there’s a new message or reply, Mem0 looks at that pair along with a background summary to see if something should be remembered. The system uses the LLM’s strengths to “reason” about what is important – this is different from simple keyword detection or date/time memory hacks.

For each candidate fact, Mem0 asks:

- Is this a totally new fact? Add it.

- Does this complement an old memory? Update it with fresh info.

- Does it conflict? Remove the old, keep the accurate.

- Is this already covered or not important? Do nothing.
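
The four outcomes above can be sketched as operations on a small in-memory store. This is an illustrative sketch, not Mem0’s implementation: the names `Op` and `apply_decision` and the id-to-text dictionary are assumptions, and the decisions are hand-picked here, whereas in the system described above the LLM would choose them.

```python
from enum import Enum
from typing import Dict, Optional


class Op(Enum):
    ADD = "add"        # a totally new fact
    UPDATE = "update"  # complements an existing memory
    DELETE = "delete"  # conflicts with an existing memory
    NOOP = "noop"      # already covered or not important


def apply_decision(store: Dict[int, str], op: Op, fact: str,
                   target_id: Optional[int] = None) -> Dict[int, str]:
    """Return a copy of the store (id -> text) with one decision applied."""
    updated = dict(store)
    next_id = max(store, default=0) + 1
    if op is Op.NOOP:
        return updated
    if op is Op.ADD:
        updated[next_id] = fact
        return updated
    if target_id not in updated:
        raise KeyError(f"no memory with id {target_id}")
    if op is Op.UPDATE:
        updated[target_id] = fact
    else:  # Op.DELETE: remove the outdated memory, keep the accurate fact
        del updated[target_id]
        updated[next_id] = fact
    return updated


store = {1: "User lives in Berlin", 2: "User likes hiking"}
store = apply_decision(store, Op.ADD, "User has a dog")
store = apply_decision(store, Op.UPDATE, "User likes hiking in the Alps", target_id=2)
store = apply_decision(store, Op.DELETE, "User lives in Munich", target_id=1)
store = apply_decision(store, Op.NOOP, "User has a dog")
```

After these four calls the store holds three memories: the refined hiking preference, the dog, and the corrected home city.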

This continuous process lets AI agents evolve their memory naturally as new information comes in. By mirroring how a person would update their understanding as a conversation progresses, Mem0 lets an agent stay up to date without needing to “start over” or overload its context window.

As a result, AI assistants using Mem0 can finally recognize returning users, preserve project context, and smoothly respond over long periods – solving major pain points for many businesses.

Selective Recall: Avoiding Latency and Information Overload

One of the biggest benefits of Mem0’s design is efficiency. Traditional methods that depend on huge context windows force the AI to scan through long, mostly irrelevant text. This adds latency to every answer and cost for every token passed into the model. Mem0 keeps chatbots and assistants responsive by recalling only “high-value” facts.

This low-latency, less expensive approach means:

- Quicker responses in real-time scenarios

- Less “noise” from irrelevant messages

- Lower infrastructure costs for enterprises

The extraction and update process ensures memory is always fresh, concise, and tailored to the conversation’s needs. When the AI responds, it’s not overwhelmed by old details that no longer matter. Instead, it works with a clear set of facts that have been curated for relevance.
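
As a toy illustration of selective recall, the sketch below picks the few stored facts that best match a query instead of passing everything to the model. The `recall` function and its word-overlap score are assumptions made for this example; a production system would presumably rank memories by some form of semantic similarity rather than shared words.

```python
def tokenize(text):
    """Lowercase a string and split it into a set of words."""
    return set(text.lower().split())


def recall(query, memories, k=2):
    """Return up to k memories that share the most words with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(m)), m) for m in memories]
    relevant = [(score, m) for score, m in scored if score > 0]
    # Stable sort: ties keep their original (insertion) order.
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [m for _, m in relevant[:k]]


memories = [
    "order 1182 was delayed by the courier",
    "user prefers email over phone calls",
    "user is allergic to peanuts",
    "order 1182 contains a blue jacket",
]
context = recall("where is my order 1182", memories, k=2)
# Only the two order-related facts are handed to the model.
```

Capping the result at `k` items is what keeps the prompt short no matter how many memories accumulate.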

For users, this means a chatbot that doesn’t slow down or shrug off their requests. For companies, it cuts costs while making the service feel smarter and more human.

By giving up on overfeeding the model and focusing on memory quality, Mem0 demonstrates the value of selective recall.

Inside Mem0g: Graph-Based Memory Representation

Mem0g builds on Mem0 by adding a layer of graph-based memory, which is especially helpful for conversations that are complex or involve many relationships. This system creates a graph structure where entities – people, places, actions – become nodes, and their relationships turn into edges. This mirrors how humans organize and link ideas in their minds.

For example, a travel planning AI needs to remember connections between cities, dates, and activities. Mem0g’s graph allows it to “see” that a user’s favorite hotel is in a city visited last year, or that their return flight links to a specific calendar event.

Mem0g starts by extracting key entities from a message. Then it finds and records relationships, such as “lives in,” “booked flight,” or “prefers window seat.” This richer, interconnected structure lets the AI do more advanced reasoning, linking distant facts for deeper answers.

This architecture shifts AI memory from a simple list of notes to an organized graph that can power relational, multi-step, or cross-topic answers – essential for sophisticated enterprise apps and digital teammates.

With Mem0g, AI agents can navigate complex knowledge, not just recall facts.

Entities and Relationships in Mem0g

Entities are the building blocks of Mem0g’s system. These include people, places, objects, concepts, and events. The AI must accurately recognize and tag these elements in order to build a useful memory map.

Once entities are identified, Mem0g’s relationship generator module goes to work. It analyzes the message to spot how entities relate – like “Alice approved budget” or “meeting scheduled at noon.” These relationships are expressed as “triplets,” a classic structure in knowledge graphs:

- (Person, Action, Object)

- (Company, Employs, Person)

- (City, Hosts, Event)

These triplets become the edges that connect nodes in the memory graph. The result is a living map of facts that can be searched, updated, and reasoned over. This makes it easier for AI to answer questions that require tracing connections, not just recalling single facts.
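
A minimal sketch of triplets stored as graph edges, with a two-hop lookup, might look like this. `MemoryGraph`, its methods, and the sample relation names are hypothetical and only meant to show the idea; Mem0g’s real storage layer is not described in this article.

```python
from collections import defaultdict


class MemoryGraph:
    def __init__(self):
        # subject -> list of (relation, object) pairs
        self.edges = defaultdict(list)

    def add_triplet(self, subject, relation, obj):
        self.edges[subject].append((relation, obj))

    def objects(self, subject, relation):
        """All objects linked to subject by the given relation."""
        return [o for r, o in self.edges.get(subject, []) if r == relation]

    def follow(self, start, *relations):
        """Follow a chain of relations from start and return the nodes reached."""
        frontier = [start]
        for relation in relations:
            frontier = [o for node in frontier for o in self.objects(node, relation)]
        return frontier


graph = MemoryGraph()
graph.add_triplet("Alice", "favorite_hotel", "Hotel Aurora")
graph.add_triplet("Hotel Aurora", "located_in", "Lisbon")
graph.add_triplet("Alice", "visited", "Lisbon")

city = graph.follow("Alice", "favorite_hotel", "located_in")  # ["Lisbon"]
```

The `follow` call answers a question no single stored fact contains: it chains two edges to find the city of Alice’s favorite hotel.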

This organized memory is uniquely suited to business applications, where information is often tightly linked and changes over time. By structuring memories as graphs, Mem0g makes even complex workflows traceable and manageable.

Conflict Detection and Resolution in AI Memory Graphs

When new information conflicts with what’s already stored in the memory graph, Mem0g is designed to spot and deal with this. Its conflict detection function checks if a fresh fact disagrees with an existing relationship. For example, if two records say different people approved a project, one must be corrected or flagged for review.

Mem0g doesn’t just replace the old memory – sometimes, history matters, like when tracking evolving preferences or multi-step plans. The system uses logic to update or resolve conflicts, preserving context and accuracy.
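
One way to keep history while resolving a conflict is to flag the old relationship as superseded instead of deleting it. The sketch below assumes single-valued relations (one valid object per subject and relation); the `Edge` class, the `valid` flag, and `upsert` are illustrative names, not Mem0g’s API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Edge:
    subject: str
    relation: str
    obj: str
    valid: bool = True


def upsert(edges: List[Edge], subject: str, relation: str, obj: str) -> List[Edge]:
    """Add an edge and return the edges it superseded.

    Assumes each (subject, relation) pair has at most one valid object.
    """
    current = [e for e in edges
               if e.valid and e.subject == subject and e.relation == relation]
    if any(e.obj == obj for e in current):
        return []  # already known, nothing to change
    for edge in current:
        edge.valid = False  # keep the record, but mark it as outdated
    edges.append(Edge(subject, relation, obj))
    return current


edges: List[Edge] = []
upsert(edges, "Project Atlas", "approved_by", "Alice")
superseded = upsert(edges, "Project Atlas", "approved_by", "Bob")
```

After the second call both edges are still stored, but only the one pointing to Bob is valid, so an agent can answer both “who approved it?” and “who was recorded before?”.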

This is critical in environments where mistakes can have real consequences, such as healthcare, finance, or legal workflows. Incorrect recall or unresolved contradictions could lead to confusion or risk, so robust conflict handling is essential for trust and reliability.

With Mem0g’s conflict management, companies can ensure their AI teammates are working with the most accurate and current information available. This enables agents to build stronger relationships and make fewer errors over time.

Performance Results on the LOCOMO Long-Term Benchmark

Mem0 and Mem0g were rigorously evaluated using the LOCOMO benchmark, a dataset designed to test long-term conversational memory. This benchmark assesses the AI’s ability to remember, reason, and respond coherently across extended conversations.

Researchers measured several aspects:

- Accuracy on single-hop and multi-hop questions

- Performance on temporal and open-domain queries

- Token consumption and response speeds

A notable aspect of the evaluation was the use of a separate LLM to judge the quality of responses. This “LLM-as-a-judge” method is gaining popularity as a scalable way to score the practical value of AI outputs.
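
The general shape of an LLM-as-a-judge loop can be sketched as follows. The prompt text, the `judge_accuracy` function, and the `stub_judge` stand-in are assumptions for illustration; they are not the prompt or scoring code used in the Mem0 evaluation.

```python
from typing import Callable, List, Tuple


def judge_accuracy(items: List[Tuple[str, str, str]],
                   judge: Callable[[str], str]) -> float:
    """Fraction of (question, reference, answer) items the judge marks CORRECT."""
    if not items:
        raise ValueError("need at least one item to score")
    correct = 0
    for question, reference, answer in items:
        prompt = (
            "You are grading an answer against a reference.\n"
            f"Question: {question}\n"
            f"Reference: {reference}\n"
            f"Answer: {answer}\n"
            "Reply with CORRECT or WRONG."
        )
        if judge(prompt).strip().upper().startswith("CORRECT"):
            correct += 1
    return correct / len(items)


def stub_judge(prompt: str) -> str:
    # Stand-in for a real judge model: checks whether the reference
    # string appears in the answer.
    fields = dict(line.split(": ", 1) for line in prompt.splitlines() if ": " in line)
    hit = fields["Reference"].lower() in fields["Answer"].lower()
    return "CORRECT" if hit else "WRONG"


score = judge_accuracy(
    [
        ("Where does Alice live?", "Berlin", "She lives in Berlin."),
        ("What is her dog called?", "Rex", "I don't know."),
    ],
    judge=stub_judge,
)
```

Swapping `stub_judge` for a call to an actual model is the only change needed to turn the sketch into a real grader.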

The benchmark results clearly showed that Mem0 and Mem0g either outperformed or matched every existing memory architecture tested. Their biggest wins were in reducing latency and operational costs while maintaining or improving coherence in answers.

Latency, Token Costs, and Accuracy: Comparing Mem0, Mem0g, and Alternatives

One of the standout points in head-to-head comparisons is efficiency. Mem0 achieves up to 91 percent lower latency and more than 90 percent lower token costs than traditional full-context approaches. Meanwhile, Mem0g keeps a strong edge on more complex, relational, or time-based tasks, showing substantial gains over other knowledge-graph and memory management solutions.
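
For readers who want to check what a figure like “91 percent lower” means, the calculation is a simple relative reduction. The numbers below are hypothetical round values chosen to make the arithmetic easy to follow; they are not measurements from the benchmark.

```python
def percent_reduction(baseline: float, improved: float) -> float:
    """Percentage by which `improved` is lower than `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100 * (baseline - improved) / baseline


# Hypothetical figures, for illustration only.
full_context_tokens = 10_000
selective_tokens = 900
saving = percent_reduction(full_context_tokens, selective_tokens)  # 91.0
```

In other words, a 91 percent reduction means the selective approach uses roughly one eleventh of the baseline.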

The team looked at a wide field of baselines for these comparisons:

- Classic memory-augmented LLM methods

- Various Retrieval-Augmented Generation (RAG) systems

- Feeding entire conversations (full-context)

- Open-source memory solutions

- OpenAI’s proprietary ChatGPT memory feature

- Standalone memory management platforms

Across question types, from simple recall to challenging multi-hop reasoning, Mem0 and Mem0g performed as well or better – but with lower response delays and a smaller compute footprint. This is a strong validation that focusing on selective, structured memory pays off, especially at enterprise scale.

For businesses, this means more affordable deployments and a better user experience, as every interaction feels faster and smarter.

How Mem0 and Mem0g Outperform Existing Memory Systems

Unlike older methods that try to retrieve every past exchange or depend on raw keyword matches, Mem0 and Mem0g focus sharply on storing only what is essential. They avoid “memory bloat” – and the sluggish results that come with it – making them practical for continuous, real-world use.

Here’s where the new designs shine:

- Mem0’s fact-based memory supports very fast recall, in some cases under 150 ms

- Mem0g’s relationship graph enables true reasoning across complex knowledge domains

- Both dynamically update and prune their memory, keeping information fresh and relevant

As a result, end users interact with AI that remembers prior details, avoids repeating mistakes, and can handle multi-session or multi-agent workflows. This leads to better issue resolution, smoother project tracking, and more meaningful conversations with digital helpers.

The architectural improvements allow Mem0 and Mem0g to go beyond merely catching up with context – they help AI agents deliver higher-value service, day after day.

When to Use Mem0 Versus Mem0g: Application Tradeoffs

The choice between Mem0 and Mem0g depends on what the application needs. Mem0 works best for fast, simple recall: remembering names, single facts, and one-off decisions. It stores memories as plain-text snippets, which keeps it lightweight and fast. This is ideal for customer chatbots, personal helpers, or any use where speed is critical and relationships between facts are simple.

Mem0g is more suited for situations that demand relational or temporal reasoning. If the AI must answer questions like “Who signed the contract last Tuesday?” or “How have these decisions connected over several meetings?” – or track workflows across many touchpoints – the richer graph-based memory pays off.

The tradeoff is a slight increase in processing time, but the gain is much deeper understanding and more sophisticated answers. Enterprises deploying large digital teams, multi-step process automation, or industry copilots should consider Mem0g for maximum impact.

Knowing when a simple memory system suffices and when graph power is needed is key to fitting the right tool to the job.

Building Reliable Enterprise AI Agents with Long-Term Memory

For many companies, moving AI from experimental tool to critical business teammate depends on one thing: trust. Reliability in remembering, updating, and connecting information is core to building that trust. Mem0 and Mem0g’s architectures allow AI copilots and digital agents to finally meet the bar set by demanding users and enterprise leaders.

When AI can pick up a conversation from weeks before, follow complex policy decisions, or maintain a running “sense of self,” businesses save time, avoid mistakes, and deliver stronger value to customers. The unpredictable, multi-topic, cross-session reality of business is no longer a barrier.

More enterprises are deploying digital teammates for roles as varied as planning, HR, customer care, and project management. With scalable memory, these agents can maintain context, personalize service, and become truly useful additions to human teams.

The result is a new standard for conversational AI: not just “smart enough for now,” but strong enough to keep evolving alongside user needs.

The Future of Conversational Memory and Autonomous Agents

As the field moves forward, selective, structured memory will become a defining feature of advanced AI agents. The success of Mem0 and Mem0g highlights this trend – AI is being asked to do more, recall more, and serve for longer periods than ever before.

Future developments may bring even deeper memory structures, allowing for richer human-like reasoning and recall. Possibilities include self-healing memory graphs, emotion tracking, and automatic summarization of key themes that emerge over time. The line between AI and human teammates will blur further, making trust, coherence, and reliability vital pillars for adoption in business and beyond.

Solutions like Mem0 and Mem0g provide a preview of the next generation of conversational AI, where context is never lost and every agent can build intelligent, evolving relationships.

For enterprises, the lessons are clear: invest in AI that remembers like a partner, not a short-term assistant.