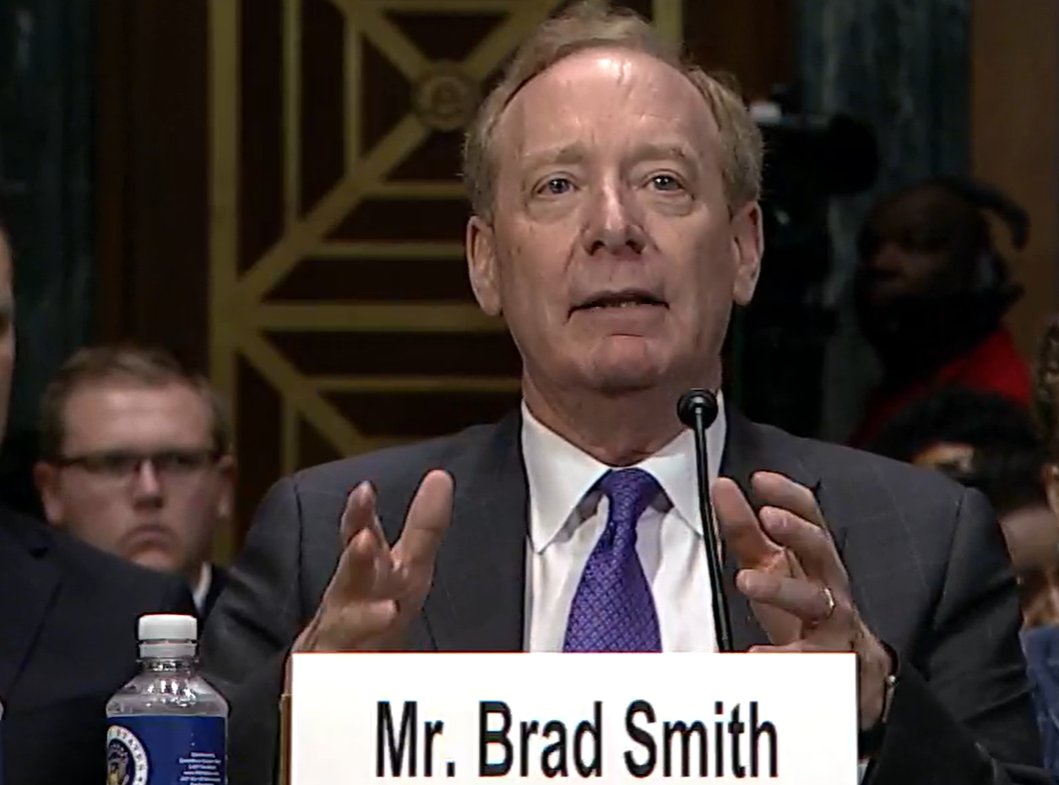

On May 8, 2025, Microsoft made headlines when its vice chairman and president, Brad Smith, revealed at a Senate hearing that Microsoft employees are banned from using the DeepSeek app. The ban stems from concerns about data security and the risk of propaganda. This is the first time Microsoft has publicly confirmed that it blocks DeepSeek, an AI-powered chatbot available on both desktop and mobile platforms. The app is barred from use by its workforce and excluded from its official app store. The move highlights growing anxiety in the tech industry about data privacy and geopolitical influence in artificial intelligence platforms.

DeepSeek, which is operated by a Chinese company, has become a focal point for privacy and censorship debates in the tech world. While other organizations and even governments have set restrictions, Microsoft’s public stance signals a new phase of open scrutiny for global AI tools. The disclosure also comes at a time of rapid AI adoption and increasing regulatory attention on the security of employee data in large technology firms.

Microsoft’s Ban on DeepSeek: What Happened

Microsoft’s decision to bar its employees from using DeepSeek stems from both practical security concerns and broader ethical considerations. Brad Smith stated clearly at the Senate hearing that the DeepSeek app is not allowed for internal use at Microsoft and will not be offered in the Microsoft Store. The policy applies across devices, including desktop and mobile, wherever Microsoft employees work.

Microsoft’s leadership pointed to the core issue: the company cannot guarantee that sensitive corporate or customer data would stay off foreign servers operated under different legal and political regimes, or out of reach of the authorities who govern them.

While DeepSeek is popular and widely used globally, Smith’s public comments about the ban reflect a proactive stance in risk mitigation, especially given the worldwide focus on AI model transparency and accountability.

It’s worth noting that this ban goes beyond the usual app restrictions, as Microsoft has typically allowed a range of AI chatbot competitors in its ecosystem.

The move is meant to reassure clients and partners that Microsoft takes privacy and organizational risk seriously, even if it means foreclosing access to innovative, competitive products when they raise red flags around data use or influence.

Brad Smith’s Senate Testimony: Key Statements

At the U.S. Senate hearing, Brad Smith was explicit: “At Microsoft we don’t allow our employees to use the DeepSeek app.” He underscored that the app’s data practices and risk of spreading “Chinese propaganda” were the main issues. The topic was front and center as lawmakers questioned leaders in the tech sector about the national security implications of foreign AI technology.

The hearing transcript shows that Smith laid out Microsoft’s reasoning for the ban and highlighted steps already taken within the company to protect intellectual property and personal data.

Smith also clarified that the ban is not about DeepSeek as a competitor but reflects a broad company policy applied to any tool seen as a tangible risk in the current geopolitical climate. He distinguished the ban from simple business rivalry, tying it back to Microsoft’s obligations to its stakeholders.

He told the Senate committee that the company’s actions were part of a wider commitment to American technology leadership and responsible innovation.

Smith’s testimony attracted attention from policymakers and media alike, shining a light on the struggles multinationals face when dealing with cross-border apps and rising data sovereignty concerns.

DeepSeek’s Data Storage in China and Privacy Concerns

Central to Microsoft’s ban is DeepSeek’s practice of storing user data on servers located in China. According to DeepSeek’s privacy policy, all data, including queries and interactions, are processed and kept on infrastructure that falls under Chinese jurisdiction.

This jurisdictional issue is key. Data on Chinese servers is governed by the country’s cyber and national security laws, making it accessible to authorities with little notice. For Microsoft, a global enterprise with strict obligations to clients and regulators, this presents an unacceptable risk that confidential or sensitive information could be intercepted or misused.

The policy makes it clear: user data is subject to Chinese regulations at every level of storage and processing, with little recourse for foreign users if a dispute or leak occurs.

Microsoft leaders cited this risk in their reasoning, arguing that security cannot be ensured if data enters cloud environments where U.S.-based or European protections do not apply and audit mechanisms are out of reach.

Chinese Law and Cooperation With Intelligence Agencies

One of the starkest concerns involves legal requirements in China that compel companies like DeepSeek to cooperate with local intelligence agencies. According to Chinese law, if authorities request user data, providers must comply. For multinational corporations, this is a significant issue.

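These legal requirements are explicit and framed in terms of state security. China’s 2017 National Intelligence Law, for example, requires organizations and citizens to support, assist, and cooperate with state intelligence work. Any user data processed on Chinese servers could therefore be accessed by the government at any time if it is deemed relevant to intelligence or national interests.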

This undermines most international standards for data sovereignty and data privacy, and it means Microsoft cannot guarantee that even employee or partner data is safe when processed by DeepSeek’s application.

Other governments, including the U.S. and those in Europe, have developed strict frameworks for data handling and cross-border transfers. In this environment, the risk that data will be accessed under less stringent legal conditions is seen as a non-starter for many organizations.

Microsoft’s stance reflects these differences in legal frameworks and the global trend toward localized, sovereign data storage to avoid these exact conflicts.

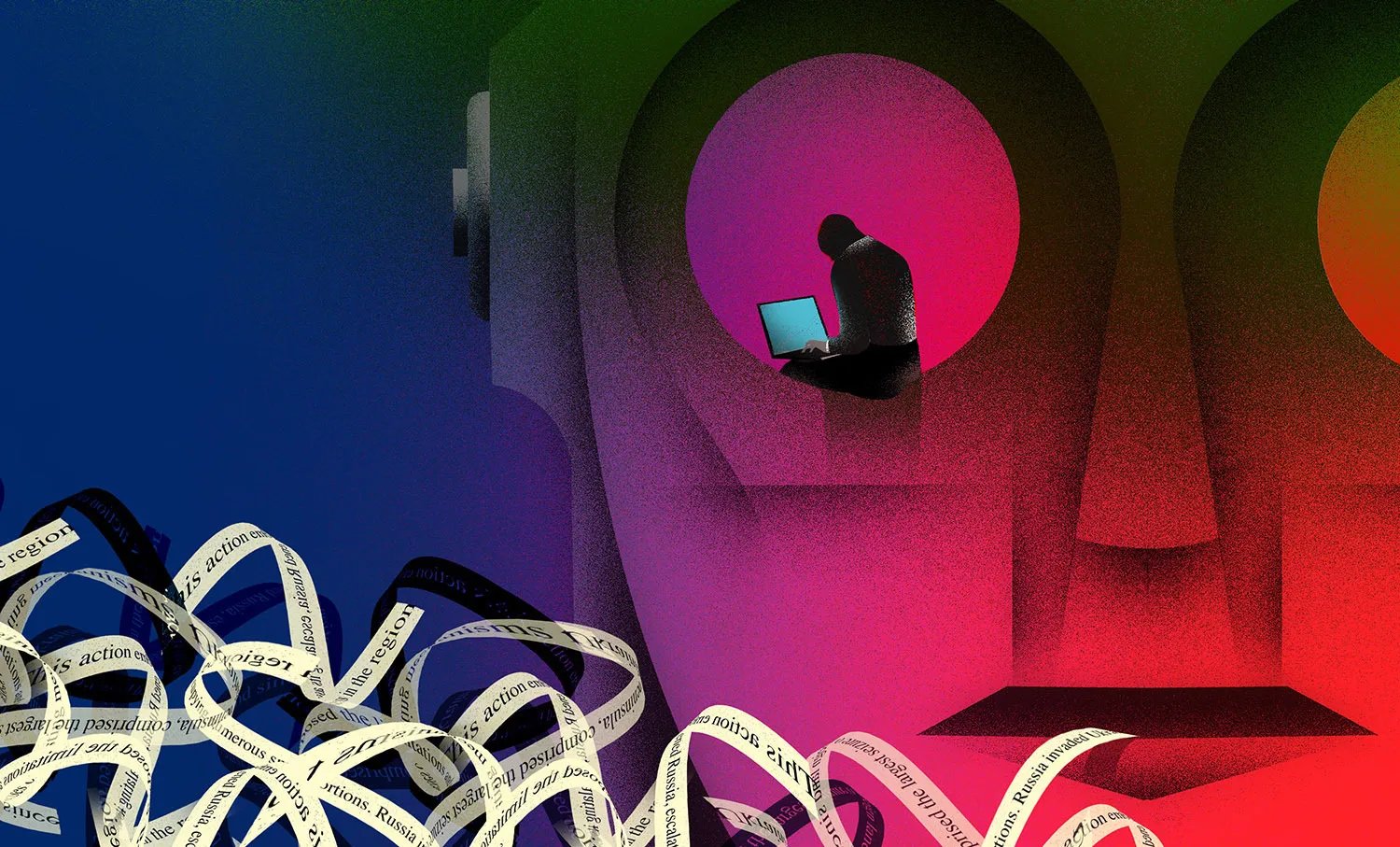

Propaganda Fears and Censorship of Sensitive Topics

Brad Smith also mentioned the risk that DeepSeek’s answers could be shaped – or censored – by guidelines set by the Chinese government. DeepSeek is known to filter and restrict answers to questions it considers politically sensitive, which aligns with the censorship routinely imposed within China’s internet environment.

This raises the issue of propaganda dissemination. Organizations that depend on objective, uncensored information worry that foreign influence could seep in through responses to everyday queries and workplace research.

Microsoft’s leadership has pointed to the possibility that DeepSeek’s operational rules make it susceptible to manipulation, whether subtle or overt. The concern is not only the loss of informational freedom, but the potential for misinformation to enter the tech workforce and influence decision-making inside a global company.

DeepSeek’s reputation for controlling conversation topics and avoiding “sensitive” political realities is a direct threat to open discourse – a fundamental value for Microsoft and its American and European clients.

The company’s actions underscore the rising trend of vetting AI models not just for accuracy, but for ideological independence.

Microsoft’s App Store and DeepSeek’s Absence

Despite DeepSeek’s popularity, Microsoft has chosen not to offer the app in the Microsoft Store. This is notable because Microsoft permits a wide range of AI chatbot competitors on its platform, which suggests the company treats the DeepSeek case as exceptionally serious.

Microsoft’s reasoning is straightforward: it will not distribute or facilitate software that poses a security or reputational risk. This blanket exclusion reflects how seriously Microsoft treats third-party software reviews and compliance evaluations.

Apps like Perplexity remain available, and even some services from direct rivals are not excluded on principle – unless they violate clear safety or privacy rules.

The absence of DeepSeek also sets a precedent. It tells other app developers that compliance with stringent privacy standards and trust-building policies is non-negotiable if they want access to Microsoft’s massive user base.

Comparison With Other AI Chatbots and Competitors

DeepSeek’s exclusion stands out when compared to other AI chatbot tools, such as Perplexity or competitors from Google. While Microsoft bans DeepSeek, Perplexity, for instance, is still available in the Microsoft Store. This suggests that Microsoft is willing to host third-party chatbot competitors, so long as they don’t present heightened security or ideological influence concerns.

Interestingly, Microsoft also doesn’t feature Google apps such as Gemini or Chrome in its app store, though this absence seems rooted less in security than in ordinary platform competition. The comparison highlights that DeepSeek is virtually alone in being both a direct competitor and a subject of geopolitical scrutiny strong enough to provoke an outright ban.

This signals that, for Microsoft, the biggest risk with DeepSeek lies at the intersection of data privacy, compliance with Chinese law, and the possible spread of propaganda via censored answers, rather than in typical commercial rivalry. The goal isn’t simply to stifle a competitor but to draw a line where trust and transparency are in doubt.

Such actions set a new bar for how tech companies handle highly scrutinized or black-box AI models versus established, more transparent alternatives.

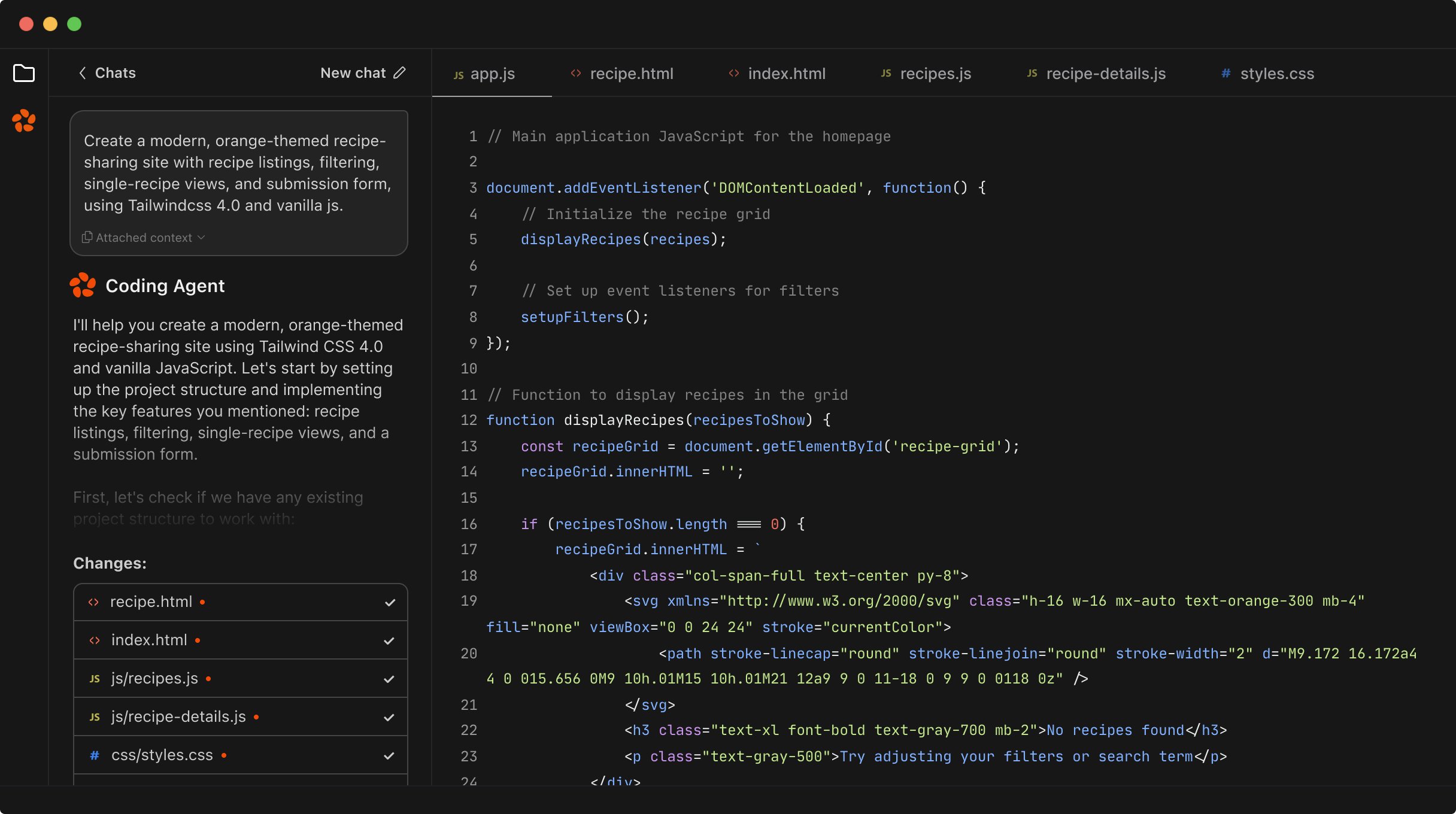

DeepSeek’s Open Source Nature and Azure Hosting

One unique element of DeepSeek is that its core model is open source. Anyone can download the model, host it on their own servers, and deliver services independently of DeepSeek’s central infrastructure. This means organizations can, in theory, avoid sending data back to China by running the model themselves.

Microsoft has even made DeepSeek’s R1 model available on its Azure cloud service, as the company announced on its official blog. However, this is very different from allowing the original DeepSeek app, which connects directly to Chinese servers, onto employee devices or into the app store.

The key distinction is who operates the model and where data is stored, not just what code is running. Microsoft’s approach draws a clear line: offering the model for enterprises to run in compliant environments is permitted, but using the official app is not. The distinction reflects how the company treats data protection and model governance as critical responsibilities.

Red Teaming and Safety Evaluations for DeepSeek on Azure

Before rolling out DeepSeek’s R1 model to Azure, Microsoft says that the product underwent “rigorous red teaming and safety evaluations.” Red teaming is a process where security teams simulate attacks or misuse scenarios to identify risks and flaws in the system before broad deployment.

According to Microsoft’s public statements, DeepSeek’s model was only made available after experts closely reviewed it for potential side effects, such as spreading misinformation or generating unsafe code. This is especially important for open source models, which can be modified and run in environments that the original developers no longer control.

The commitment to independent safety evaluations and red teaming is a central plank in how Microsoft approaches “high-risk” models and highlights the due diligence that goes into bringing such technology to enterprise users.

Microsoft’s Modifications to the DeepSeek Model

While discussing DeepSeek at the Senate hearing, Brad Smith revealed that Microsoft had gone inside the DeepSeek model to “change” it and remove what he described as “harmful side effects.” The company did not elaborate on the specific technical changes, but the statement signals a hands-on effort to audit and refine foreign AI systems before making them accessible on U.S. infrastructure.

This process aligns with industry best practices, in which potentially unsafe or biased AI models are subject to layers of review and, if necessary, direct modification to align with strict corporate or regulatory standards.

Microsoft’s willingness to rework or constrain open-source models before deployment is a key way the company differentiates responsible innovation from simply distributing the latest technology as-is. The company’s focus is on limiting risk, even as it supports a diverse cloud ecosystem.

Transparency about such interventions is likely to become more common as AI supply chains increasingly mix open- and closed-source code maintained by teams in many jurisdictions.

The Broader Trend: Organizations and Governments Restricting DeepSeek

Microsoft is not alone in restricting or banning DeepSeek. Many other organizations and countries have also imposed rules that limit or prevent use of the app, mainly because of the same concerns: data sovereignty, compliance with local laws, and the risk of foreign influence in internal decision-making.

This broader trend reflects a shift toward putting trust and provenance first when selecting enterprise technology tools. Microsoft’s open ban on DeepSeek signals that barriers are going up as global technology companies tighten control over third-party digital services to sidestep legal pitfalls and public scrutiny.

For governments, similar bans are part of efforts to assert control over national digital infrastructure and to harden it against perceived or real threats from cross-border intelligence gathering and propaganda campaigns.

As more state and private-sector actors make this shift, international discussions over mutual standards and auditability are likely to intensify, further shaping the future landscape of global AI deployment.

DeepSeek’s Privacy Policy and Data Handling Practices

DeepSeek’s public privacy policy lays out its data handling practices in detail. All user data, including text interactions and logs, are processed and stored on servers located inside China. This approach raises a red flag for companies concerned with client confidentiality and legal compliance outside China.

The terms specify that the information is managed in accordance with Chinese cybersecurity and privacy requirements, which directly conflict with the stricter frameworks seen in regions like the EU and US. For companies operating in regulated spaces, this mismatch can be a deal-breaker.

Microsoft cited these policies as incompatible with its own mandatory protections and with the transparency expected by large-scale enterprise or government customers. DeepSeek’s model of data retention and disclosure may be normal in its home market, but is a barrier to wider global adoption as regulatory scrutiny rises worldwide.

These issues have only become more important as AI capabilities expand and organizational adoption accelerates.

Potential Security, Propaganda, and Code Generation Risks

The risks that concern Microsoft include security flaws, the spread of propaganda, and the possibility that the AI could generate insecure code or misleading information. Propaganda concerns are especially acute for tools that process large volumes of employee queries daily and influence company knowledge bases or work outputs.

There is also the technical risk: AI models trained or managed primarily in one country may embed assumptions, datasets, or vulnerabilities unknown to or unchecked by foreign companies. This could cause problems ranging from subtle misinformation to outright security breaches if code or advice is mishandled.

Because these issues have real-world consequences, Microsoft has prioritized keeping all possible points of failure – whether technical or political – under careful scrutiny. The combination of technical, legal, and ideological risks means the company is not willing to gamble on DeepSeek’s trustworthiness for internal operations.

Why Microsoft Disclosed the Ban Publicly Now

This Senate hearing marked the first time Microsoft openly discussed its DeepSeek ban. The timing is notable. With AI spending and adoption ramping up, and lawmakers increasing pressure for transparency in tech, Microsoft is sending a clear signal about its values and risk management priorities.

By speaking publicly, the company can show regulators, shareholders, and the public that it is staying ahead of potential problems rather than reacting after a breach or controversy. This openness also helps Microsoft present itself as a responsible partner for governments and businesses navigating the uncertain territory of foreign AI vendors.

The move fits a broader industry pattern where companies are proactively stating their red lines and policies, rather than waiting for scandal to force their hand. It also lets other companies know what kinds of due diligence will be needed in the near future if they want to do business with or within major corporations like Microsoft.

Reactions From the Tech Community and Policy Makers

The tech world has watched Microsoft’s stance with a mixture of approval and anxiety. Many see the move as prudent, especially amid growing fears of influence operations and data exposure through foreign technology providers. Policy analysts suggest it’s an important step toward stronger norms for AI accountability and user privacy.

Some critics point out that major app bans can deepen the digital divide, with users in different regions getting access to different levels of service, transparency, and innovation. However, others argue that these divisions already exist behind the scenes, so clarity is beneficial for everyone.

From lawmakers’ perspective, Microsoft’s testimony and actions serve as a wake-up call about the fast-changing nature of software supply chains and the need for updated laws to govern AI use in sensitive environments.

Industry experts expect this public debate to continue, and for more companies and agencies to update their own software vetting rules in the months ahead.

The Future of AI Chat Platforms Amid Geopolitical Tensions

Microsoft’s ban on DeepSeek underscores a growing challenge: as AI tools become more central to business and daily life, who gets to control and access these systems becomes a matter of national and corporate security as much as technical innovation.

For AI chat platforms, transparent governance, location of data storage, and clear auditing procedures will be more crucial than ever to win trust internationally. As tensions between tech superpowers persist, the push to build alternatives that meet the privacy and operational standards of each region is only increasing.

Expect more scrutiny of cross-border AI, more rigorous public vetting, and a growing demand for models and apps that can prove their independence from any single government’s influence. Microsoft’s move sets a standard others will be measured by for the foreseeable future.