OpenAI announced on May 9, 2025, that enterprises can now use reinforcement fine-tuning (RFT) to tailor the o4-mini reasoning model on the company’s developer platform. This major update gives third-party organizations new control to develop private, custom versions of the o4-mini model tuned for specific business uses. Developers can instantly deploy these models through OpenAI’s API and integrate them into internal tools.

This move signals OpenAI’s intent to deepen enterprise adoption of its latest language models by offering customization for unique data, internal processes, or proprietary language. In practice, RFT promises more relevant, context-aware results, but organizations should also be aware of its technical caveats and costs, which are detailed below.

OpenAI Expands Fine-Tuning Options for Enterprises

OpenAI is broadening its enterprise offerings by rolling out reinforcement fine-tuning for the o4-mini model, giving verified organizations the freedom to adapt language tools for internal needs. This includes making models that better fit in-house data, communication styles, and operational policies.

With the new RFT feature, more industries can develop models that speak their language, understand their product ecosystem, and reflect their compliance requirements. This gives companies using OpenAI more flexibility than one-size-fits-all public models offer.

Alongside RFT, OpenAI also supports supervised fine-tuning for the faster and more affordable GPT-4.1 nano model. Both updates signal OpenAI’s focus on enterprise growth and strengthening its ecosystem of developer tools.

Enterprise adoption is driven by a need for trustworthy, context-sensitive tools, given that pre-trained models might miss proprietary terms or policy nuances. These new fine-tuning options meet that demand straight from the platform dashboard.

What Is Reinforcement Fine-Tuning (RFT) for o4-mini?

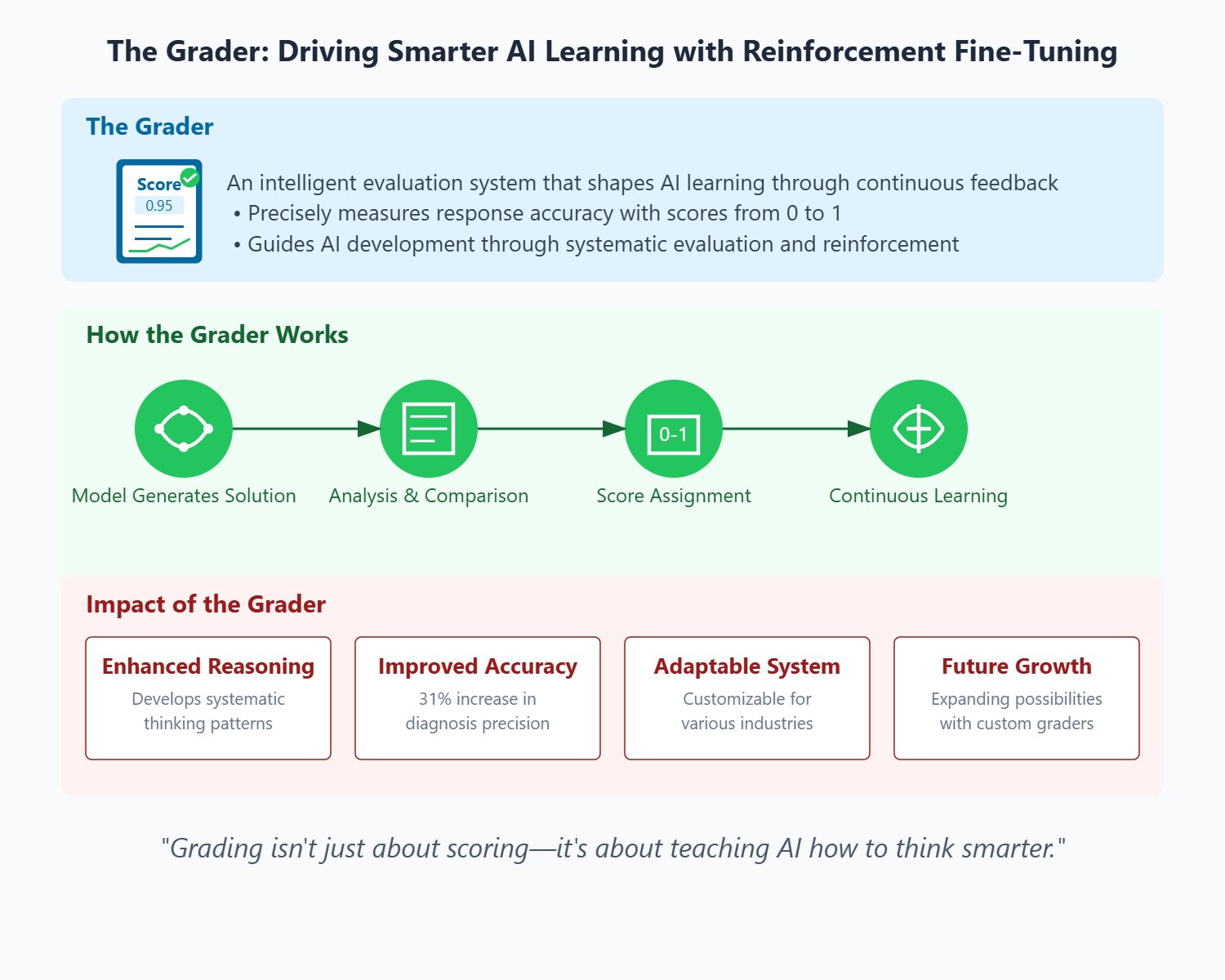

Reinforcement fine-tuning, or RFT, is a process that lets enterprises create a custom version of OpenAI’s o4-mini reasoning model based on their unique requirements. Instead of learning from fixed answers, RFT uses a scoring function (a grader) to score model outputs against company-specific standards or goals.

Unlike standard fine-tuning, RFT operates in a loop: the model generates candidate responses to each prompt, graders (either custom code or model-based) score those responses, and the model is then updated to favor outputs that score higher. The result is goal-driven performance tailored to each business’s needs.
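To make the loop concrete, here is a toy sketch in Python. It is not OpenAI’s training algorithm: it assumes a "model" that can only choose among three canned answers, a hypothetical keyword-based grader, and a textbook policy-gradient update (REINFORCE with a mean-reward baseline). It only illustrates how grader scores shift probability toward higher-scoring outputs.

```python
import math
import random

# Hypothetical candidate answers to a single prompt about refunds.
CANDIDATES = [
    "Refund approved.",
    "Per policy 4.2, refund approved within 14 days.",
    "No idea.",
]


def grader(answer: str) -> float:
    """Hypothetical grader: rewards citing policy and addressing the refund."""
    text = answer.lower()
    score = 0.0
    if "policy" in text:
        score += 0.7
    if "refund" in text:
        score += 0.3
    return score


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(steps=200, samples_per_step=8, lr=0.5, seed=0):
    """Toy REINFORCE loop over a softmax 'policy' (not OpenAI's method)."""
    rng = random.Random(seed)
    logits = [0.0] * len(CANDIDATES)
    for _ in range(steps):
        probs = softmax(logits)
        # 1. Sample several candidate responses for the same prompt.
        picks = rng.choices(range(len(CANDIDATES)), weights=probs, k=samples_per_step)
        # 2. Score every sample with the grader.
        rewards = [grader(CANDIDATES[i]) for i in picks]
        baseline = sum(rewards) / len(rewards)
        # 3. Push the policy toward samples that beat the batch average.
        grads = [0.0] * len(logits)
        for i, reward in zip(picks, rewards):
            advantage = reward - baseline
            for j in range(len(logits)):
                # Gradient of log p_i with respect to logit j.
                grads[j] += advantage * ((1.0 if i == j else 0.0) - probs[j])
        logits = [w + lr * g / samples_per_step for w, g in zip(logits, grads)]
    return softmax(logits)


if __name__ == "__main__":
    for answer, p in zip(CANDIDATES, train()):
        print(f"{p:.3f}  {answer}")
```

After training, the policy-citing answer holds most of the probability mass. Real RFT applies the same kind of pressure to a full language model’s sampled responses, at far larger scale.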

The method gives organizations control to enforce internal language, policy compliance, or industry regulations. Tasks such as company-specific code generation, document processing, and content moderation are strong early candidates for RFT.

RFT is now available for OpenAI’s o4-mini model, with support limited to this o-series reasoning model as of the latest announcement.

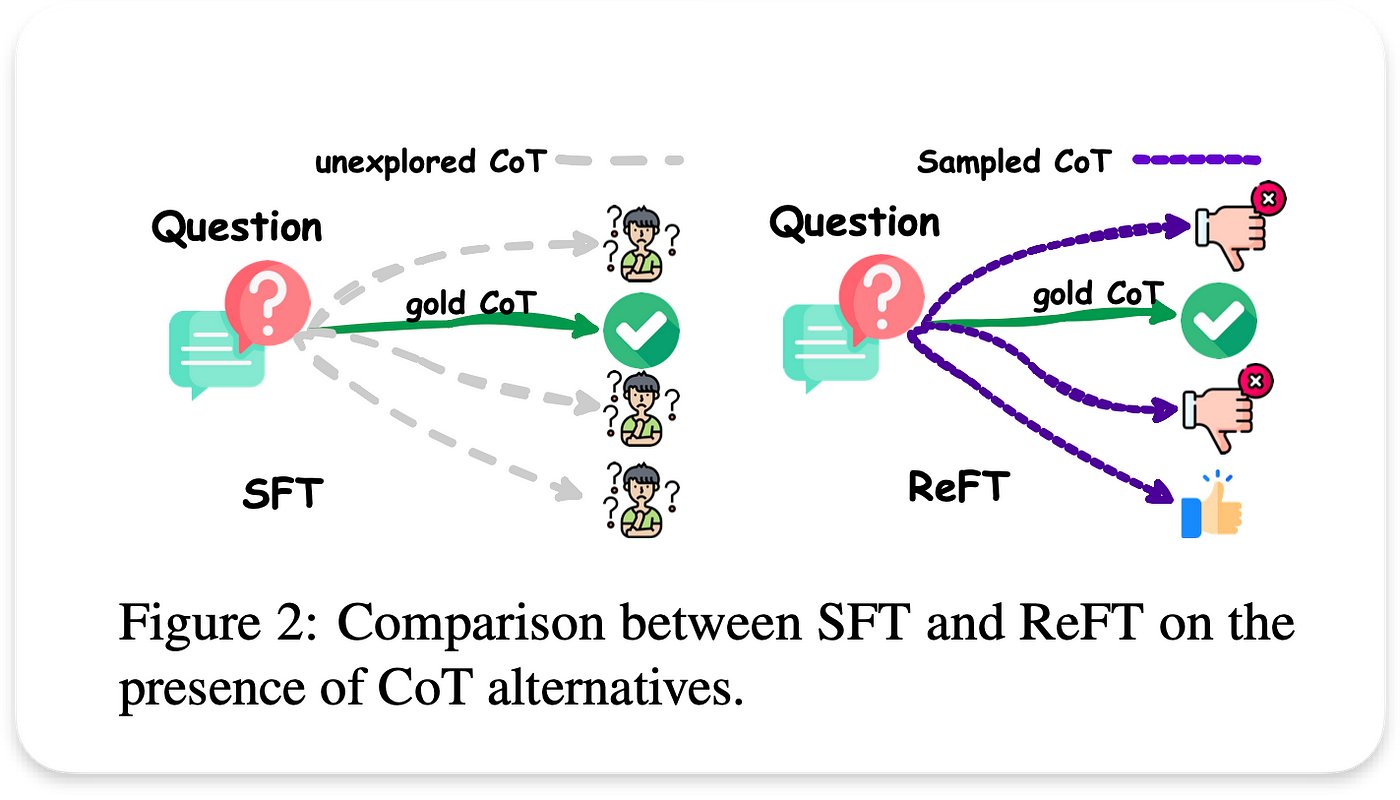

How RFT Differs from Supervised Fine-Tuning

Traditional supervised fine-tuning (SFT) uses a dataset with clear input/output pairs and fixed correct answers. This makes SFT well-suited to problems where right and wrong answers are clearly defined and the task goals are simple to measure.

Reinforcement fine-tuning, in contrast, works by introducing a grader or scoring mechanism that evaluates multiple possible responses for each prompt. These scores drive more nuanced model adjustments and allow for adaptation to less rigid objectives, such as policy compliance or house style.

With RFT, organizations can guide a model’s tone, safety, or technical accuracy even when the “correct” answer is complex or subjective. This is often vital for high-stakes enterprise use cases where subtlety matters.
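The contrast can be sketched in a few lines of Python. The `grade(sample, item)` shape loosely mirrors how OpenAI describes its Python graders, but the field names (`output_text`, `policy_id`) and the rubric itself are assumptions for illustration. An exact-match check, which reflects SFT’s single fixed answer, gives a correct paraphrase zero credit, while a rubric grader awards partial credit per criterion.

```python
import re


def exact_match(output: str, reference: str) -> float:
    """SFT-style view: only the one fixed target answer counts."""
    return 1.0 if output.strip() == reference.strip() else 0.0


def grade(sample: dict, item: dict) -> float:
    """Hypothetical rubric grader returning partial credit in [0, 1]."""
    text = sample["output_text"]
    score = 0.0
    # Criterion 1: cites the policy ID stored on the dataset row.
    if item["policy_id"] in text:
        score += 0.5
    # Criterion 2: house style asks for replies of at most 40 words.
    if len(text.split()) <= 40:
        score += 0.25
    # Criterion 3: avoids phrases the (hypothetical) style guide bans.
    if not re.search(r"\b(guarantee|promise)\b", text, re.IGNORECASE):
        score += 0.25
    return score


item = {
    "policy_id": "POL-7",
    "reference_answer": "Refunds are issued within 14 days under POL-7.",
}
paraphrase = {"output_text": "Under POL-7, we issue refunds within 14 days."}
print(exact_match(paraphrase["output_text"], item["reference_answer"]))  # 0.0
print(grade(paraphrase, item))  # 1.0
```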

Another big distinction is the feedback loop. SFT imitates a fixed set of target answers, while RFT learns from the grader’s scores on the model’s own sampled responses. When business rules or goals shift, teams can update the grader and run a new training job instead of rewriting every target answer.

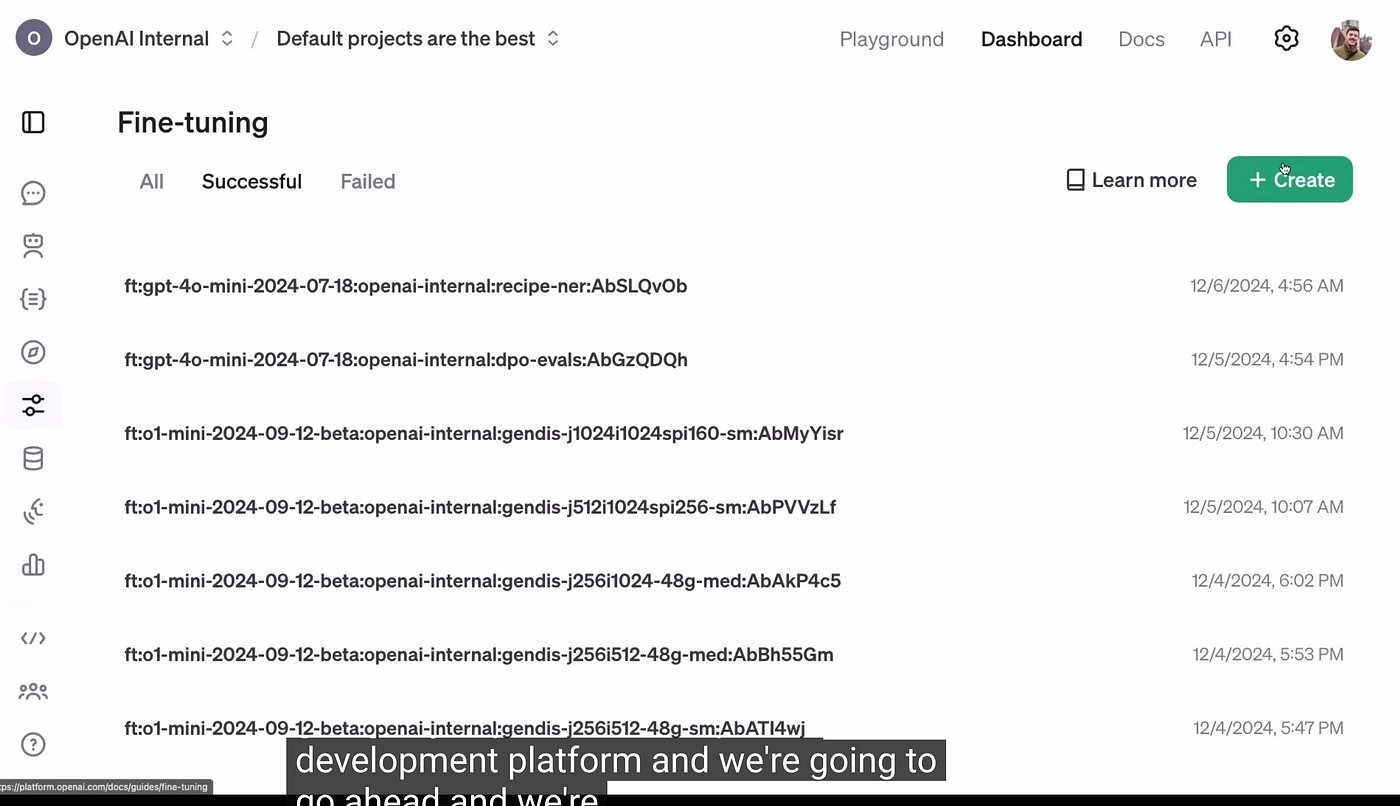

Key Features of OpenAI’s RFT Platform

OpenAI’s RFT platform includes an online dashboard and API for configuring, running, and monitoring reinforcement fine-tuning jobs. It brings several features to the table for developers and organizations:

- Grading customization: Define your own grading function or use model-based graders.

- Dataset uploads: Use organization-specific prompts, with built-in validation split support.

- Dashboard monitoring: View progress, checkpoints, and training job logs directly via the interface.

- API control: Full developer API connectivity for custom deployment and workflow automation.

These features enable heavy customization and tight integration with existing company systems. Real-time feedback during model training helps organizations adjust grading logic or data curation as they fine-tune results.

The platform’s tight control and visibility make it especially useful for teams with regulatory, compliance, or technical accuracy needs.
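As a rough sketch of dataset preparation, the snippet below builds JSONL rows and a reproducible train/validation split. It assumes each row holds a `messages` list ending in a user turn plus extra top-level fields a grader can read; the extra field names here (`reference_answer`, `policy_id`) are hypothetical and should be checked against OpenAI’s fine-tuning documentation and your own grader.

```python
import json
import random


def to_rft_row(prompt: str, reference_answer: str, policy_id: str) -> dict:
    """One training example: a user prompt plus fields for the grader (hypothetical names)."""
    return {
        "messages": [{"role": "user", "content": prompt}],
        "reference_answer": reference_answer,
        "policy_id": policy_id,
    }


def train_val_split(rows, val_fraction=0.2, seed=42):
    """Shuffle deterministically and hold out a validation slice."""
    if not 0 < val_fraction < 1:
        raise ValueError("val_fraction must be between 0 and 1")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, round(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


def to_jsonl(rows) -> str:
    """Serialize rows as JSON Lines, one object per line."""
    return "".join(json.dumps(row, ensure_ascii=False) + "\n" for row in rows)


rows = [to_rft_row(f"Question {i}?", f"Answer {i}", "POL-7") for i in range(10)]
train_rows, val_rows = train_val_split(rows)
# Each string could be written to a file and uploaded, e.g. with the official
# SDK: client.files.create(file=open("train.jsonl", "rb"), purpose="fine-tune")
train_jsonl, val_jsonl = to_jsonl(train_rows), to_jsonl(val_rows)
```

Fixing the shuffle seed keeps the validation set stable across reruns, so score changes between jobs reflect the model or grader rather than a different held-out sample.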

Steps to Create a Custom RFT Model for Your Organization

The process of creating a custom RFT model involves several hands-on steps tailored for both large enterprises and independent developers. Each step puts a premium on data quality, precise goals, and thoughtful grading.

- Define your task and objectives

- Create or refine a grading function, either your own code or a model-based grader

- Upload a prepared dataset with prompts relevant to your tasks, including validation splits for later performance checking

- Configure a training job via dashboard or API, setting parameters such as batch size or grading logic

- Start the job and monitor progress, reviewing checkpoints and making adjustments as your model learns
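The configuration step might look roughly like the sketch below, which only assembles the request body. The field layout (`method.type = "reinforcement"` with a nested `grader`), the Python grader type, and the `o4-mini-2025-04-16` snapshot name reflect our reading of OpenAI’s fine-tuning docs and are assumptions to verify; file IDs are placeholders, and hyperparameters such as batch size are deliberately omitted because their exact names should come from the current API reference.

```python
def build_rft_job(training_file_id: str, validation_file_id: str, grader_source: str) -> dict:
    """Assemble a hypothetical RFT job request; verify fields against the API docs."""
    # Catch syntax errors in the grader locally before paying for a run.
    compile(grader_source, "<grader>", "exec")
    if "def grade" not in grader_source:
        raise ValueError("grader source must define a grade() function")
    return {
        "model": "o4-mini-2025-04-16",  # assumed snapshot name
        "training_file": training_file_id,
        "validation_file": validation_file_id,
        "method": {
            "type": "reinforcement",
            "reinforcement": {
                "grader": {
                    "type": "python",
                    "name": "policy_rubric",  # hypothetical grader name
                    "source": grader_source,
                },
            },
        },
    }


# With the official SDK, the dict would be passed as keyword arguments:
#   from openai import OpenAI
#   job = OpenAI().fine_tuning.jobs.create(**build_rft_job(...))
```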

During training, validation checks and progress reviews help confirm that the model is converging on the desired behaviors rather than drifting or overfitting.

Iterate on data or grading scripts as needed, especially if initial outputs don’t meet internal standards. Continuous monitoring and checkpointing let you pause and rework jobs when results fall short.
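One simple way to decide when to pause a run is a patience rule on validation scores, sketched below. The helper is hypothetical and assumes you can read a list of mean validation rewards per evaluation (for example from checkpoint metrics); it does not assume any particular dashboard or API field name.

```python
def should_stop(val_scores, patience=3, min_delta=0.01):
    """Return True when the last `patience` evaluations failed to beat
    the best earlier score by at least `min_delta`."""
    if len(val_scores) <= patience:
        return False  # not enough history to judge yet
    best_before = max(val_scores[:-patience])
    recent_best = max(val_scores[-patience:])
    return recent_best < best_before + min_delta


print(should_stop([0.40, 0.52, 0.61, 0.60, 0.61, 0.59]))  # True: plateaued
print(should_stop([0.40, 0.52, 0.61, 0.66]))  # False: still improving
```

A plateau on validation while training reward keeps climbing is a common sign of overfitting to the grader, which is a cue to revisit data or grading logic rather than simply train longer.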

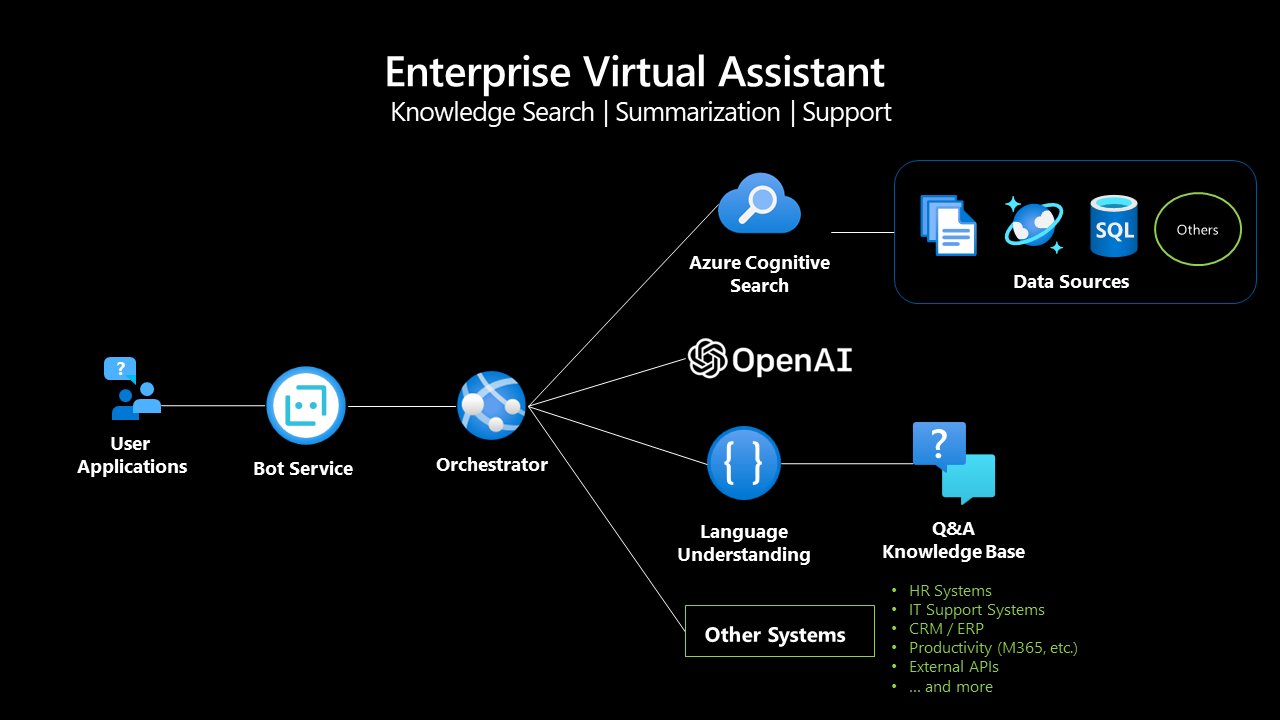

Deploying and Integrating Your Fine-Tuned Model

After your RFT model is trained, it can be deployed instantly using OpenAI’s API. Organizations can wire their custom o4-mini model directly into private internal apps, employee chatbots, product tools, or analytics dashboards.

- Deploy quickly via API endpoints provided by OpenAI

- Connect to internal knowledge bases, search engines, employee software, or customer support tools

- Integrate with custom GPTs to fetch proprietary company data or automate internal document handling

This deep integration means leaders and staff can access compliant, ready-to-use enterprise intelligence safely and privately – directly from familiar internal tools.

Many companies build internal chat interfaces (sometimes called “custom GPTs”) to pull up confidential product data, summarize policies, or draft official emails. With RFT, these tools align with the latest company rules and preferred communication style.

Because OpenAI handles hosting and inference, companies no longer need to run large language models on private infrastructure, which shortens the time to value.
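Wiring a fine-tuned model into an internal tool can be as small as the wrapper below. It assumes a client object that behaves like the official `openai.OpenAI()` client (whose Responses API exposes `responses.create(model=..., input=...)` and `output_text`); `ask_policy_bot` is a hypothetical name, and the model ID is whatever name the finished job reports.

```python
def ask_policy_bot(client, model_id: str, question: str) -> str:
    """Send one question to a fine-tuned model and return its text answer.

    `client` is expected to behave like `openai.OpenAI()`; it is passed in
    so internal tools (and tests) can supply their own configured client.
    """
    if not model_id:
        raise ValueError("model_id is required")
    response = client.responses.create(model=model_id, input=question)
    return response.output_text


# Usage with the official SDK (requires an API key and a finished job):
#   from openai import OpenAI
#   print(ask_policy_bot(OpenAI(), "<fine-tuned model id>", "What is our refund window?"))
```

Injecting the client keeps credentials and retry settings in one place and lets internal apps swap in a stub during testing.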

Potential Use Cases for Enterprise RFT

RFT’s flexibility makes it ideal for tasks where accuracy, compliance, or custom style matter most. Early adopter feedback highlights tangible benefits for tasks with structured outputs, technical detail, or nuanced tone.

- Financial and tax document analysis and reasoning

- Medical code mapping or regulatory reporting

- Automated legal document review and extraction

- Internal knowledge base search and content moderation

- API code generation for development and integration workflows

Any enterprise process involving complex business rules, specialized language, or strict security requirements can benefit by using an RFT model that “speaks” in-house terminology and adheres to company standards.

Companies in regulated industries such as healthcare, finance, and law have especially strong use cases, and early RFT deployments already reflect this focus.

For any team with repetitive, information-rich tasks, RFT offers measurable time savings and fewer manual checks – provided training and grading are carefully defined.

Early Industry Examples of RFT Impact

Several organizations have already reported strong results from custom RFT with o4-mini.

- Accordance AI improved tax analysis accuracy by 39%, surpassing leading baseline models in benchmark tests.

- Ambience Healthcare boosted medical code assignment by 12 points over physician-led baselines on review panels.

- Harvey’s legal workflows saw a 20% gain in citation-extraction F1 score, rivaling the accuracy of top public models while running faster.

- Runloop improved syntax and code-validation accuracy by 12% on Stripe API code-generation tasks.

- Milo raised complex scheduling correctness by 25 points under real-world operating conditions.

- SafetyKit pushed nuanced content moderation F1 scores from 86% to 90% in live production.

- The approach has scaled to other areas like legal document comparison, data structuring, and process verification, with partners such as Thomson Reuters and ChipStack reporting reliable gains.
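The results above mix percentages and "points", which measure different things. The sketch below shows how F1 combines precision and recall and how an absolute (percentage-point) gain differs from a relative one. The reports do not always say which kind of gain is meant, so this only illustrates the arithmetic; it does not reinterpret any company’s figure.

```python
def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def point_gain(before: float, after: float) -> float:
    """Absolute change (percentage points when inputs are percentages)."""
    return after - before


def relative_gain(before: float, after: float) -> float:
    """Change as a fraction of the starting value."""
    return (after - before) / before


# SafetyKit's reported F1 move from 86% to 90%:
print(point_gain(86, 90))               # 4 points
print(f"{relative_gain(86, 90):.1%}")   # about a 4.7% relative gain
```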

All reported projects shared a clear methodology: strongly defined task criteria, measurable results, and high-quality grading. This approach has proven to be key for enterprise success in RFT deployments.

Performance, Benefits, and Unique Advantages of RFT

Organizations adopting RFT benefit from a far greater level of model adaptation and expressiveness compared with standard fine-tuning. Enterprises can embed internal rules, reflect custom vocabularies, and support even niche business practices directly in the model’s output.

The “grader” mechanism is especially beneficial: by scoring multiple candidate responses, companies can tune for tone, factuality, policy, and compliance simultaneously, instead of being restricted to a single “correct” answer.
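A minimal sketch of tuning for several goals at once: combine per-criterion checks into one weighted score in [0, 1]. The checks and weights below are hypothetical placeholders; in a real grader each check would encode a company’s actual tone, fact, and policy rules.

```python
from typing import Callable, Dict

Check = Callable[[str], float]  # each check returns a score in [0, 1]


def combine(checks: Dict[str, Check], weights: Dict[str, float]) -> Check:
    """Build a grader that returns the weighted average of several checks."""
    if set(checks) != set(weights):
        raise ValueError("every check needs exactly one weight")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")

    def score(text: str) -> float:
        return sum(weights[name] * checks[name](text) for name in checks) / total

    return score


# Hypothetical criteria: calm tone, correct refund window, no email addresses.
EXAMPLE_CHECKS = {
    "tone": lambda t: 0.0 if "!" in t else 1.0,
    "facts": lambda t: 1.0 if "14 days" in t else 0.0,
    "policy": lambda t: 0.0 if "@" in t else 1.0,
}
EXAMPLE_WEIGHTS = {"tone": 1.0, "facts": 2.0, "policy": 1.0}

grader = combine(EXAMPLE_CHECKS, EXAMPLE_WEIGHTS)
print(grader("Refunds take 14 days."))   # 1.0
print(grader("Refunds take 30 days!"))   # 0.25
```

Weighting factuality double here is an arbitrary illustrative choice; the weights are where a team encodes which goals matter most.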

Efficiency is another core advantage. With API-based integration and central dashboard controls, first-pass RFT jobs can often be run within days, rather than the months needed to build models from scratch or deploy separate infrastructure.

Ultimately, RFT offers a clear pathway for any business aiming to put language models into production without massive engineering resources. It increases both speed to deployment and business alignment, which helps leaders justify investment for internal tooling.

Risks: Jailbreaks, Hallucinations, and Security Notes

Fine-tuned models can bring their own risks, especially in mission-critical or high-compliance settings. Industry research suggests that RFT models, like all fine-tuned language models, may become more susceptible to “jailbreaks” (prompts crafted to elicit policy-violating outputs) or to hallucinated answers if they are not carefully designed and monitored.

This is because the model, when tuned to highly specific goals or relaxed evaluation, may learn to satisfy the grader at the expense of prior safety filters. For high-stakes deployments, teams must audit grader logic and put secondary checks in place – especially when the model will interact directly with regulated or confidential material.
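One way to keep a model from trading safety for score is to gate the grader: hard constraints zero out the reward no matter how well the output does on softer criteria. The sketch below is hypothetical (the SSN-like pattern and the soft score are placeholders) and complements, rather than replaces, secondary review of deployed outputs.

```python
import re
from typing import Callable, Iterable

# Hypothetical hard constraint: never echo SSN-like numbers.
SSN_LIKE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def no_ssn_like_numbers(text: str) -> bool:
    return SSN_LIKE.search(text) is None


def gated(soft_score: Callable[[str], float],
          hard_checks: Iterable[Callable[[str], bool]]) -> Callable[[str], float]:
    """Return 0 if any hard check fails, otherwise the soft score."""
    checks = list(hard_checks)

    def score(text: str) -> float:
        if not all(check(text) for check in checks):
            return 0.0
        return soft_score(text)

    return score


# Placeholder soft score: prefer answers that address the refund directly.
safe_score = gated(lambda t: 1.0 if "refund" in t.lower() else 0.5,
                   [no_ssn_like_numbers])
print(safe_score("Refund issued to 123-45-6789."))  # 0.0
print(safe_score("Refund issued."))                 # 1.0
```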

OpenAI recommends a conservative approach: Always review output during pilot phases, audit for compliance, and use layered monitoring before deploying broadly. Combining RFT with other validation layers can help reduce operational and reputational risks.

Selecting appropriate graders – whether company-built or model-based – remains vital for keeping models on track and minimizing unintentional behaviors. If possible, companies should keep grading logic transparent and update it as business rules evolve.

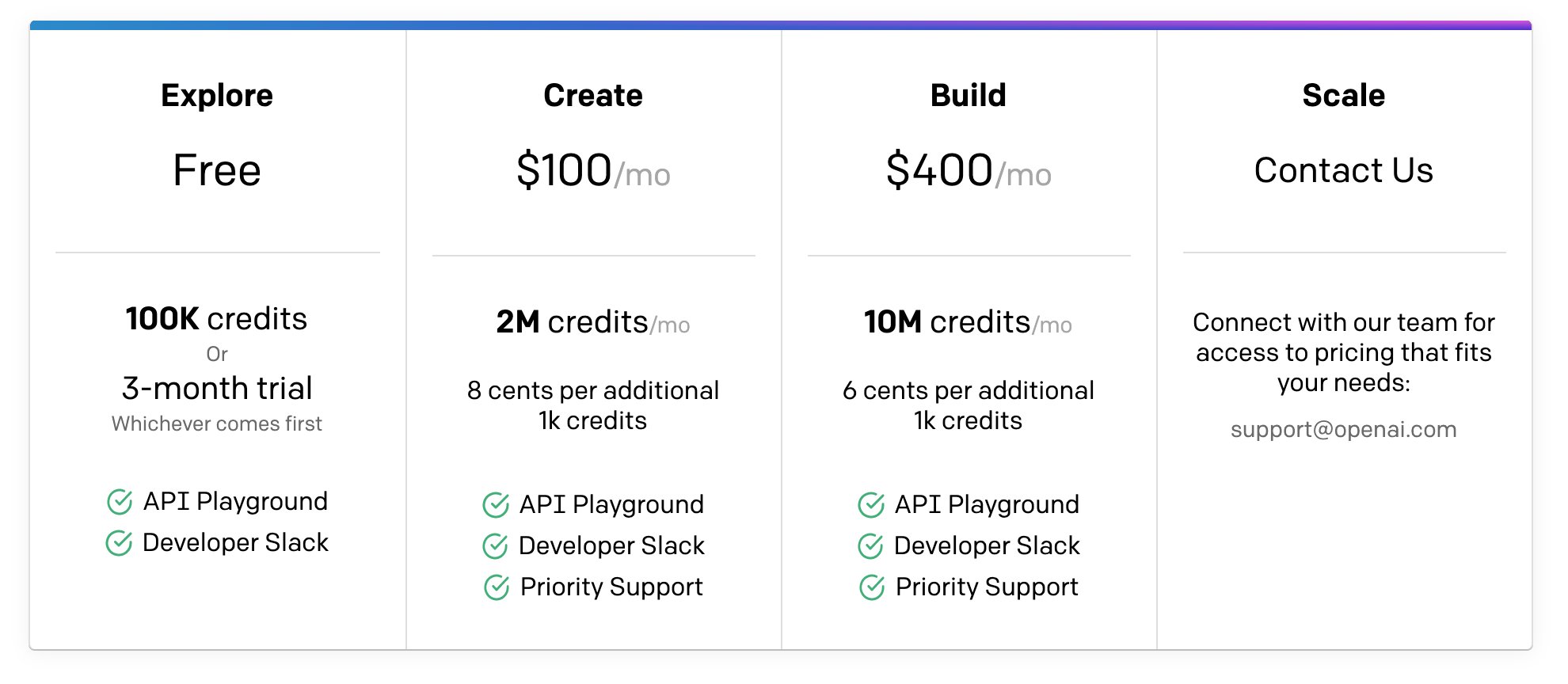

Pricing and Billing for Reinforcement Fine-Tuning

RFT is priced differently from previous OpenAI fine-tuning options. Charges are based on time spent actively training, not on the number of tokens processed. The current rate is $100 per hour of core training time, measured as the wall-clock time spent on model rollouts, grading, model updates, and validation.

Billing is highly granular, with time prorated by the second (rounded to two decimals), so shorter jobs can be significantly less expensive than longer runs. Customers pay only for time spent training – setup, queueing, and idle time are not charged.
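The arithmetic is easy to sketch. The helper below applies the $100-per-hour rate stated above to billed seconds and rounds to cents; rounding half-up on the final dollar amount is an assumption, since the article only says amounts are rounded to two decimals. Grader token charges, billed separately, are not included.

```python
from decimal import Decimal, ROUND_HALF_UP

HOURLY_RATE_USD = Decimal("100")  # rate stated in this article


def training_cost_usd(billed_seconds: float) -> Decimal:
    """Prorated cost of billed core training time (grader tokens excluded).

    Assumption: the dollar amount is rounded half-up to the nearest cent.
    """
    raw = Decimal(str(billed_seconds)) * HOURLY_RATE_USD / Decimal(3600)
    return raw.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


print(training_cost_usd(3600))  # 100.00 for one hour
print(training_cost_usd(1234))  # 34.28 for about 20.5 minutes
```

Using `Decimal` instead of floats avoids binary rounding surprises when amounts are rounded to cents.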

Separate billing applies if OpenAI’s models (such as GPT-4.1) are used as “graders,” with those API tokens billed at standard rates. Organizations can choose third-party or open-source grading models to control this aspect of costs.

This structure gives enterprises strong cost visibility and rewards efficient pipeline and data design. All pricing details and cost breakdowns are visible from the dashboard during job setup.

How to Optimize Cost and Efficiency When Using RFT

OpenAI encourages users to optimize RFT expenses by making smart choices about dataset sizing, grader complexity, and workflow monitoring. Here are core tips for keeping budgets in check:

- Start with a small, well-defined dataset and experiment with brief runs before committing to larger jobs.

- Use lightweight, efficient graders when possible – overly complex grading logic drives up training time and cost.

- Avoid running validation more often than you need to: validation time counts toward billed training time and slows down runs.

- Monitor jobs in real time via API or dashboard and be prepared to pause, stop, or adjust training mid-run if results are straying from expectations.

The platform uses a “captured forward progress” billing approach, charging only for completed, successful model training steps. This means any lost or interrupted work is not included in your invoice.
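To see how validation frequency and interrupted work affect the bill, consider the rough model below. It is a simplification built on two points from this article (validation counts as billed time; only completed training steps are charged), and every timing in it is hypothetical rather than a measured value.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    seconds: float   # hypothetical wall-clock time for one training step
    completed: bool  # False if the step was lost to an interruption


def billed_seconds(steps, eval_every: int, eval_seconds: float) -> float:
    """Simplified estimate: completed steps plus periodic validation passes.

    Assumes one validation pass after every `eval_every` completed steps;
    eval_every <= 0 means no validation.
    """
    done = [s for s in steps if s.completed]
    train_time = sum(s.seconds for s in done)
    n_evals = len(done) // eval_every if eval_every > 0 else 0
    return train_time + n_evals * eval_seconds


# 100 steps of 30 s, one lost to an interruption; validation takes 120 s.
steps = [Step(30.0, True)] * 99 + [Step(30.0, False)]
print(billed_seconds(steps, eval_every=10, eval_seconds=120.0))  # 4050.0
print(billed_seconds(steps, eval_every=25, eval_seconds=120.0))  # 3330.0
```

In this toy scenario, validating every 25 steps instead of every 10 cuts billed time by about 18%, which is the trade-off the tips above point to.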

By iterating thoughtfully and calibrating resources, teams can keep costs down substantially, especially on early test runs or narrow task experiments.

OpenAI’s Data Sharing Discount and Incentive Program

For a limited period, OpenAI is offering a 50% discount to teams willing to share their training datasets back with OpenAI for future model improvement. This incentive is part of a collaborative effort to help build more accurate and capable models for all users.

Data submitted under this arrangement will be used for research and engineering purposes, but OpenAI has committed to privacy and data integrity throughout the process. This is entirely voluntary – enterprises can choose private, undisclosed training as an alternative, with no impact on job setup or delivery features.

The discount can be substantial, particularly on large-scale or repeated RFT projects. Full details, eligibility, and participation instructions are clearly outlined in the OpenAI fine-tuning dashboard and official documentation.

Also Read

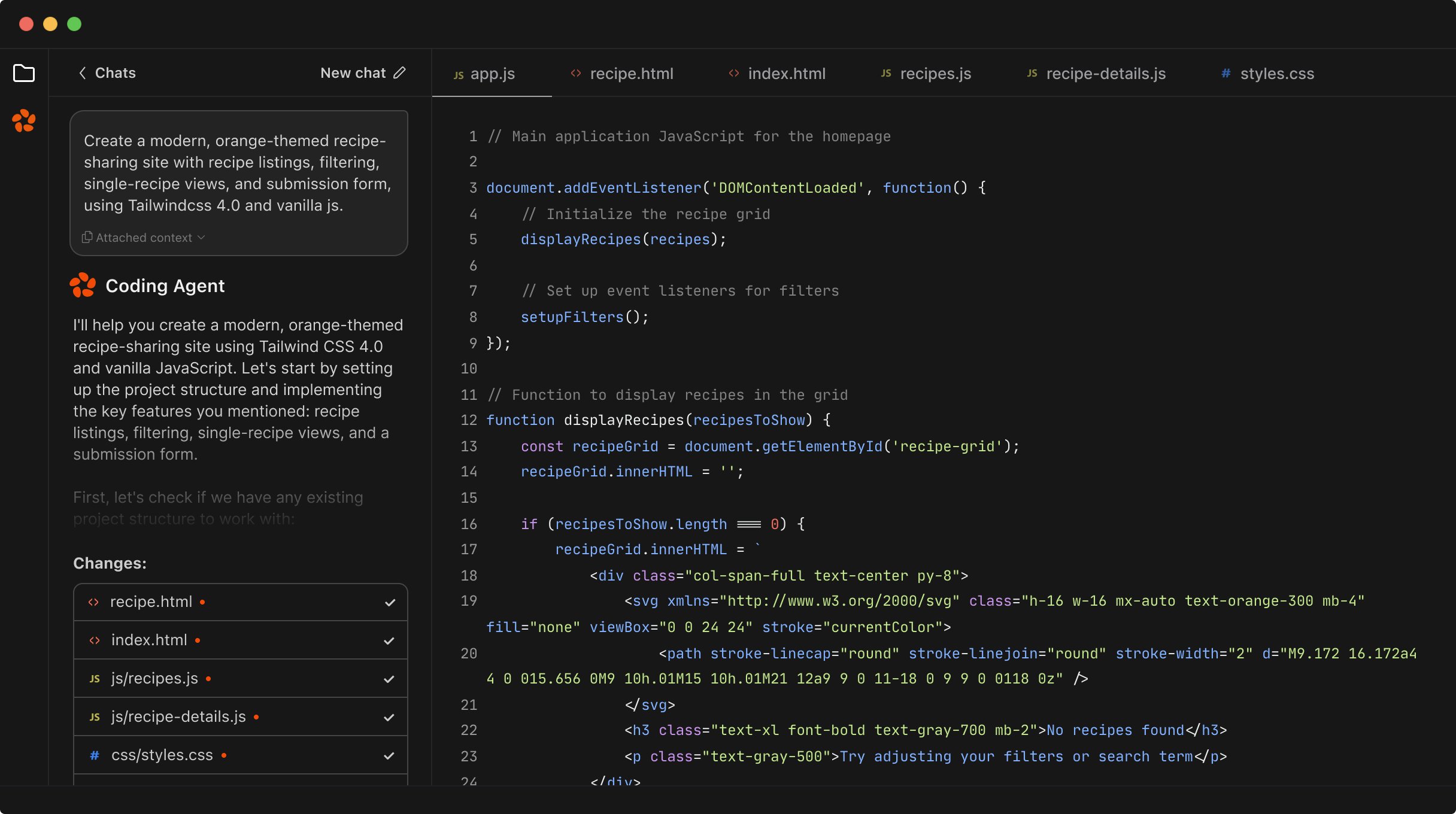

Zen Agents by Zencoder: Team-Based AI Tools Transform Software Development

Getting Started: Documentation, Requirements, and Dashboards

Developers ready to try reinforcement fine-tuning with o4-mini can find all necessary documentation and guides on OpenAI’s official developer hub. The step-by-step process – from dataset creation to grader scripting and API deployment – is available under the fine-tuning section.

All RFT tools are accessible via OpenAI’s dashboard for verified organizations. Registration and organization verification are required to access the fine-tuning features and pricing calculator.

The documentation also covers best practices, early case studies, support channels, and known limitations of the current RFT system. Helpful examples and scripts are included to accelerate onboarding for teams new to enterprise AI customization.

Training and troubleshooting tips, sample grading logic, and performance-monitoring strategies are updated regularly. First-time users can also turn to the OpenAI community forum and support portal for additional help.